Kant

Dec 13, 2005

Immanuel Kant introduced the human mind as an active originator of experience rather than just a passive recipient of perception. How has his philosophy influenced the world after him?

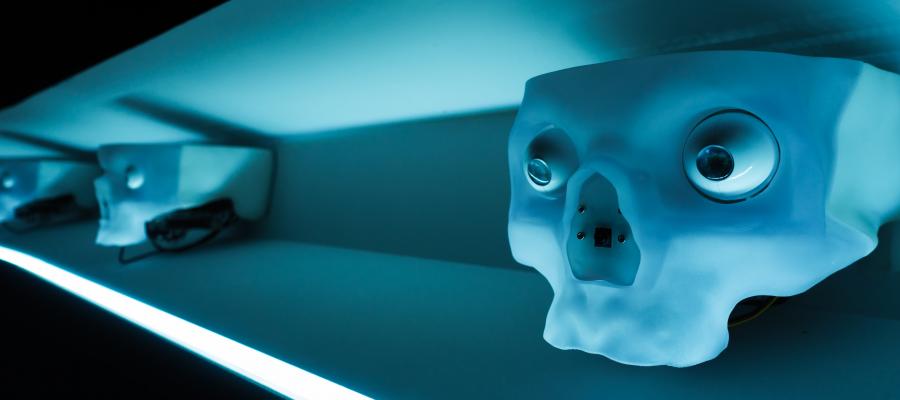

About two months ago, I posed a philosophical puzzle (as I’ve been doing during the Corona crisis) that had to do with whether it’s morally acceptable to lie to robots. My puzzle was addressed at Immanuel Kant’s moral philosophy, which seems to say that lying is always wrong (and under the right interpretation—there’s the rub—it does say that).

The puzzle was this: if lying is always wrong (for Kant), would it be wrong (according to Kant’s theory) to lie to a robot with speech technology who came to your door trying to locate innocent people who were hiding from a tyrannical government?

On the one hand, you would be uttering a falsehood, which seems like lying. On the other hand, it’s just a robot, and the “lie” is for protecting innocent people. So, given his theory, what should Kant say you should do?

At the time I posed the puzzle, I also did two other things.

I promised to reach out to some Kant scholars to see what they would say about it.

I predicted that the Kant scholars would say (or imply) that I had butchered Kant’s thinking on the matter.

I can now proudly say that I have made good on my promise, and I have received extremely interesting replies from some excellent Kant scholars: Allen Wood (of Indiana University), Eric Wilson (of my own department at Georgia State University), and Anna Wehofsits (of the Ludwig-Maximilians-Universität in Munich).

But it is only with ambivalent pride that I can say that my prediction was correct: all three Kant scholars implied that I had butchered Kant’s theory! In particular, the interpretive presuppositions behind my puzzle were wrong.

I was taking Kant’s moral theory as being meant to give some sort of recipe or formula for what’s right or wrong to do. But that’s not the right way of looking at his ethical work. Rather—and this point was pushed on me by both Eric Wilson and Allen Wood—the main aim of Kant’s views on lying is to explain what it is of moral worth that is at stake when it comes to uttering falsehoods. Then, once we know what is at stake, we must apply our own judgment to individual cases to know whether it is wrong to utter a falsehood in a given circumstance. As Eric Wilson put it, “there is no substitute for sound judgment.”

All three Kant scholars were also clear with me on the following point. Kant never meant to claim that every single falsehood one might utter counts as a lie. So even though lying (in the sense in which Kant intended that term) is always wrong, it doesn’t follow that it is always wrong to utter a falsehood. Importantly, the famous “Nazi at the door case” might be a situation in which, due to extenuating circumstances, uttering a falsehood does not count as lying in the morally loaded way in which Kant means the term (though here there is some debate). Effectively, then, Kant has made the word “lie” (German: lügen) into a term of art, which then comes to mean (i) to knowingly utter a falsehood and (ii) to do it in a way that dishonors something that is morally important.

This is where what’s morally at stake comes in.

The two things of moral worth that one might violate or dishonor when uttering a falsehood are foremost these:

One’s duty to oneself.

One’s duty to uphold the system of law.

The first of these duties is general. Lying does oneself some measure of dishonor or debasement. Insofar as that’s true, it is morally wrong in that it violates one’s duty to oneself.

The second of these is more specific, and it appears that some people who hold that Kant thinks uttering a falsehood is never morally acceptable are misconstruing points he made that were targeted at a more specific context: namely, declarations one makes in a legal setting. Kant’s point is that if one tells a falsehood under oath, one is doing wrong not only to the specific people one is lying to (and to oneself) but also to the system of right and law, which is of value in and of itself. After all, pervasive falsehood would be especially corrosive to the functioning of necessary legal systems. So the system of law counts as a further moral stake when one is weighing whether uttering a falsehood in a given situation is wrong.

So where does this leave Kant in relation to our hypothetical situation of potentially “lying” to a robot to protect an innocent family?

Interestingly, none of those I asked gave a clear-cut answer. Allen Wood was very clear that we shouldn’t craft general principles from unlikely cases, but that doesn’t resolve the unlikely case. Nevertheless, I think we have the resources on the table for addressing the question on our own.

We should ask: Would uttering a falsehood to the robot violate one’s duty to oneself? And would it violate one’s duty to the system of law?

Starting with the second question, I think the answer is a clear “no.” The reason is that the laws that would punish an innocent family just for their ethnicity are not part of a system of laws that is worth upholding. Insofar as that is true, duty to the system of law doesn’t rule out “lying” to the robot, since that robot is a cog in the wheel of an unjust system.

But what about one’s duty to oneself? Here I confess I would find something degrading about having to lie to a robot. But perhaps that’s an artifact of the situation rather than a moral violation in the act itself.

So, as far as we can tell, what would Kant’s verdict be? Perhaps that it’s okay to tell the robot a falsehood, as long as the reason for doing it is so worthy that one can do it with a clear conscience. In my judgment, saving an innocent family would fit that bill.

Photo by Alessio Ferretti on Unsplash

Comments (3)

UniBomberBear

Saturday, August 15, 2020 -- 11:36 PM

“A truth that's told with bad intent

Beats all the lies you can invent.” - William Blake -

According to Blake a Truth used for deception is worse than lying.

Tim Smith

Monday, August 17, 2020 -- 6:40 AM

UBB - I think your post here is worse than a lie. Am I wrong?

The spell is broken.

https://www.thisamericanlife.org/713/made-to-be-broken

It is clearly OK to lie to robots if humanity dictates it. Humanity uber alles.

Harold G. Neuman

Thursday, January 20, 2022 -- 8:59 AM

So, if robots COULD be people, would it then be ok to lie to them?